Database Snapshot Testing: Validating Data Pipeline Changes with DuckDB

Imagine you are developing a data-intensive application where mistakes are extra unacceptable.

Excited, you propose to refactor and optimize the code. Better performance, better memory usage, and cleaner code.

Your project manager asked whether that would break anything.

Failure is not an option.

I had to show him it was safe and that the current (correct) behavior won’t change and that there are no silent errors affecting the final output.

To ensure code changes won’t introduce regressions, we could adopt a systematic testing approach like shadow testing or snapshot testing.

I opted for a simple, SQL-based symmetric diff over full data reconciliation (for snapshot testing) or complex deployment setups (for shadow testing).

General idea:

-

Using production code, run a fresh migration, and run an automated test suite that populates the outputs table.

-

Export that table’s data to a file. This is our baseline snapshot.

-

Switch to the dev branch with the updated codebase.

-

Redo the process, generating a new file. This is the updated snapshot.

-

Compare the updated snapshot with the baseline snapshot.

-

If they are the same, nothing was affected. Great.

-

Otherwise, something’s off and it needs to be debugged.

Flow

PS: You can test code snippets via DuckDB Shell.

Snapshot testing

Think of it as taking a screenshot of your output and locking it in time.

Next time you change the code and rerun it, you take another screenshot and compare.

If they match, the behavior didn’t change. Great.

If they don’t, something did. Something’s off.

I first learned about this technique when using Jest. It was mostly for UI—diffing rendered HTML trees.

But the idea is broader: freeze a known-good output, then diff later runs against it.

There are some general-purpose snapshot testing libraries in C#, in Swift, in Rust, etc.

It’s simple and effective.

Symmetric difference

Consider the following datasets:

-

Alice’s Adventures in Wonderland characters:

- Alice

- The White Rabbit

-

Through the Looking-Glass characters:

- Alice

- Red Queen

To find the difference between them:

WITH

dataset_1 AS (

SELECT * FROM unnest(ARRAY[

'Alice',

'The White Rabbit'

]) AS characters(name)

),

dataset_2 AS (

SELECT * FROM unnest(ARRAY[

'Alice',

'Red Queen'

]) AS characters(name)

)

-- Only in dataset 1 but not in 2: The White Rabbit

(SELECT * FROM dataset_1

EXCEPT

SELECT * FROM dataset_2)

UNION

-- Only in dataset 2 but not in 1: Red Queen

(SELECT * FROM dataset_2

EXCEPT

SELECT * FROM dataset_1)

Expected output:

- The White Rabbit

- Red Queen

For our use case, we only care whether there are differences or not, so we can wrap the whole query in an EXISTS check:

SELECT EXISTS(

WITH

dataset_1 AS (

SELECT * FROM unnest(ARRAY[

'Alice',

'The White Rabbit'

]) AS characters(name)

),

dataset_2 AS (

SELECT * FROM unnest(ARRAY[

'Alice',

'Red Queen'

]) AS characters(name)

)

-- Only in dataset 1 but not in 2: The White Rabbit.

(SELECT * FROM dataset_1

EXCEPT

SELECT * FROM dataset_2)

UNION

-- Only in dataset 2 but not in 1: Red Queen

(SELECT * FROM dataset_2

EXCEPT

SELECT * FROM dataset_1)

) AS different

The result will be a single row with different set true.

DuckDB

Think of it as SQLite++.

It works fine with PostgreSQL and Parquet files.

Parquet support

COPY

(SELECT 'Alice' AS "name", 18 AS "age")

TO 'test.parquet'

(FORMAT parquet);

You can preview the output file test.parquet using Parquet Viewer.

PostgreSQL connector

DuckDB has a core extension to connect to PostgreSQL (our app database) for reading and writing.

For example:

INSTALL postgres;

LOAD postgres;

ATTACH 'dbname=wonderworks user=hatter password=mushrooms host=127.0.0.1' AS db (TYPE postgres, SCHEMA 'public');

COPY

(SELECT * FROM db.computed_values)

TO 'baseline.parquet'

(FORMAT parquet)

WITH

dataset_1 AS (

SELECT FROM 'baseline.parquet'

),

dataset_2 AS (

SELECT FROM 'updated-outputs.parquet'

)

-- COMPARE DATASETS AS WE DID EARLIER...

Practical considerations

-

Why DuckDB and Apache Parquet?

- Our data (

computed_valuesin this example) is columnar, so a column-oriented data file format works best for storing/compressing/querying data. - DuckDB’s Parquet support makes snapshotting trivial.

- DuckDB and its extensions work in Node.js. That’s what we use.

- Our data (

-

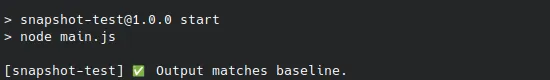

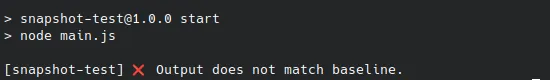

The script exits with the right codes (

0or1), so it could be used in CI/CD for automated testing or even locally pre-commit.

// In package.json

"scripts": {

"test:snapshot": "node snapshot-test.js",

"test:snapshot:reset": "rm results/baseline.parquet && npm run test:snapshot"

// Delete snapshots files older than 7 days

"test:snapshot:cleanup": "find results/ -name 'updated-*.parquet' -mtime +7 -delete"

}

- To help debug unexpected changes, compare the baseline to the latest timestamped snapshot.

Assumptions and limitations

-

Nulls are not distinct. Because that’s how set operations (e.g.

EXCEPT) work. -

There are no duplicate rows and, if they exist, they do not matter.

-

There are no non-deterministic columns (e.g. timestamps like

created_at). If there are, we could treat them by:- Redaction: Not selecting them (

SELECT product_id, quantity FROM ...) or overriding them (SELECT *, NULL AS created_at FROM ...). - Faking/mocking them using something like:

- Redaction: Not selecting them (

-

The table is small enough to snapshot fully and diff quickly.

-

The database schema (and specifically that table) won’t change between versions.

- We could add a schema validation step before content diffing.

-

Character encoding/collation is the same or, if different, they do not affect equality checks (think

en_US.utf8vsC.UTF-8).

Conclusion

Snapshot testing won’t catch why things changed. But it will tell you that they did.

And that’s exactly what you want when you’re refactoring or optimizing code that has to be correct.

DuckDB gives us the tools to make it fast, reproducible, and portable.

No magic, just SQL.

Give it a baseline, run your tests, and check the diff.

Because sometimes, no difference is the best result you can get.

See snapshot-test in https://github.com/djalilhebal/project-sigma

References

- Parquet Viewer

Snapshot testing libraries

-

https://github.com/VerifyTests/Verify

- “VerifyTests/Verify: Verify is a snapshot testing tool that simplifies the assertion of complex data models and documents.”

- C#.

-

https://github.com/pointfreeco/swift-snapshot-testing

- “Delightful Swift snapshot testing.”

- Swift.

-

https://github.com/mitsuhiko/insta

- Rust.

END.